Planning for Scalability

Scale up your FME Server by increasing job throughput and optimizing job performance.

Increasing Job Throughput

To increase the ability of FME Server to run jobs simultaneously, consider any of these approaches:

Adding FME Engines on the Same Machine as the FME Server Core

You can scale FME Server to support a higher volume of jobs by adding engines on the same machine as the FME Server Core. A single active Core is all you need to scale processing capacity. The FME Server Core contains a Software Load Balancer (SLB) that distributes jobs to the FME Engines. Each FME Engine processes one job at a time, so ten engines can run ten jobs simultaneously. If you have many simultaneous job requests and jobs are consistently waiting in the job queue, consider adding engines to your Core machine.

Note: Adding engines to the same machine does not reduce the time a single translation takes to run. That time depends on the underlying hardware and the design of the workspace; complex workspaces, big data manipulation, and large datasets take longer to run.
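The difference between throughput and single-job run time can be illustrated with a small simulation. This is a simplified model, not the actual Software Load Balancer algorithm: it assumes every job is already queued, each engine runs one job at a time, and the next queued job goes to whichever engine frees up first. The function name `simulate` and the job durations are hypothetical.

```python
import heapq


def simulate(job_durations, num_engines):
    """Return a (start, finish) pair for each job.

    Simplified model (an assumption, not the actual SLB algorithm): every job
    is already queued at time 0, each engine runs one job at a time, and the
    next queued job goes to whichever engine becomes free first."""
    free_at = [0.0] * num_engines  # min-heap: time each engine next becomes free
    heapq.heapify(free_at)
    schedule = []
    for duration in job_durations:
        start = heapq.heappop(free_at)  # earliest available engine
        finish = start + duration       # run time is unaffected by engine count
        heapq.heappush(free_at, finish)
        schedule.append((start, finish))
    return schedule


jobs = [10.0] * 10  # ten queued jobs of 10 minutes each (hypothetical)
for engines in (1, 5, 10):
    schedule = simulate(jobs, engines)
    all_done = max(finish for _, finish in schedule)
    longest_job = max(finish - start for start, finish in schedule)
    print(f"{engines} engine(s): queue cleared after {all_done:.0f} min, "
          f"longest single job {longest_job:.0f} min")
```

With one engine the queue clears after 100 minutes, with five after 20, and with ten after 10, yet every individual job still takes 10 minutes.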

Adding FME Engines on a Separate Machine from the FME Server Core is considered primarily for Increasing Job Performance (below), but it may also offer benefits here.

Active-Active Architecture

An Active-Active Architecture provides multiple stand-alone FME Server installations. In addition to providing failover, this configuration distributes jobs among the FME Servers through a third-party load balancer.
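The role of the third-party load balancer can be sketched as a round-robin rotation that skips installations that are down. This is only an illustration: real load balancers offer other strategies (least connections, weighted, and so on), and the class name and the host names `fmeserver-a` and `fmeserver-b` are hypothetical.

```python
class RoundRobinBalancer:
    """Hypothetical third-party load balancer for an Active-Active setup:
    requests go to each FME Server installation in turn, and installations
    marked as down are skipped (failover)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one FME Server installation is required")
        self.servers = list(servers)
        self.down = set()  # installations currently failing health checks
        self._next = 0

    def pick(self):
        # Try each installation at most once, continuing the rotation.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.down:
                return server
        raise RuntimeError("no FME Server installation is available")


balancer = RoundRobinBalancer(["fmeserver-a", "fmeserver-b"])
normal = [balancer.pick() for _ in range(4)]
balancer.down.add("fmeserver-a")  # simulate an outage
during_outage = [balancer.pick() for _ in range(3)]
print(normal)         # ['fmeserver-a', 'fmeserver-b', 'fmeserver-a', 'fmeserver-b']
print(during_outage)  # ['fmeserver-b', 'fmeserver-b', 'fmeserver-b']
```

During normal operation, jobs alternate between the two installations. When one installation fails, all jobs go to the remaining one.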

Increasing Job Performance

Use the following approaches to optimize the performance of jobs run by FME Server.

Adding FME Engines on a Separate Machine

Adding FME Engines on a Separate Machine lets you run jobs in close physical proximity to the data they read and write. You can use this approach within a single network or across geographically distributed networks.

Note: Distributing FME Engines across geographically distributed networks requires that the network connecting the FME components be high-speed and reliable. Specifically, the FME Engines read data and configuration files from, and write log files to, the FME Server System Share location. An occasionally connected network is not sufficient; the connection must be permanent.

To ensure that each job is run by the intended engine, you must combine this approach with Job Routing (below).

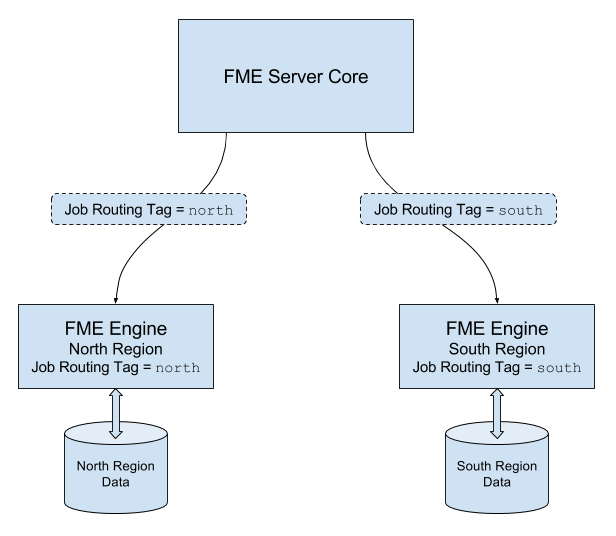

For example, consider a network with two data sources: one in a northern region and another in a southern region. To run jobs efficiently, it makes sense to locate FME Engines in both regions. Jobs run with the job routing tag "north" access data in the northern data store. These jobs are routed to the FME Engines in the northern region, which are configured with the same tag. Likewise, jobs run with the job routing tag "south" access data in the southern data store and are routed to the FME Engines in the southern region, which are configured with the same tag.
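The north/south example can be modeled as one queue per tag, with each engine drawing only from the queues for its own tags. This is a simplified sketch of the routing idea, not FME Server's internal implementation. The class name, method names, and job names are hypothetical.

```python
from collections import deque


class TagRouter:
    """Hypothetical model of tag-based job routing: jobs wait in a queue per
    tag, and an engine only takes jobs whose tag it is configured with."""

    def __init__(self):
        self.queues = {}  # tag -> deque of waiting job names

    def submit(self, job, tag):
        self.queues.setdefault(tag, deque()).append(job)

    def next_job_for(self, engine_tags):
        # Return the next waiting job from the first of this engine's tags
        # that has work queued, or None if nothing matches.
        for tag in engine_tags:
            queue = self.queues.get(tag)
            if queue:
                return queue.popleft()
        return None


router = TagRouter()
router.submit("update_north_parcels", "north")
router.submit("update_south_roads", "south")
router.submit("export_north_roads", "north")

north_engine = ["north"]  # engine in the northern region
south_engine = ["south"]  # engine in the southern region
picked = [
    router.next_job_for(north_engine),
    router.next_job_for(south_engine),
    router.next_job_for(north_engine),
    router.next_job_for(south_engine),
]
print(picked)
# ['update_north_parcels', 'update_south_roads', 'export_north_roads', None]
```

The southern engine never picks up a "north" job, even when it is idle. This is what keeps each job close to its data.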

Job Routing

Job Routing controls how the workload of running workspaces is spread across engines. In a distributed environment, for example, you may want to run small jobs on certain engines and larger jobs on other engines.

Alternatively, you may have a mix of operating systems, some of which cannot run certain FME formats. For instance, consider an FME Server installed on Linux: Linux cannot run some formats that your business may require, so you may need to configure an additional FME Engine on a Windows machine.
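When submitting jobs, the choice of routing tag can follow the criteria described above: job size and platform requirements. The sketch below shows one way to encode that decision. The tag names ("small", "large", "windows"), the 500 MB threshold, and the workspace names are all hypothetical, and the rules would need to match how your engines are actually tagged.

```python
from dataclasses import dataclass

# Hypothetical size threshold separating "small" from "large" jobs.
LARGE_JOB_MB = 500.0


@dataclass
class Job:
    workspace: str
    input_size_mb: float
    needs_windows: bool  # True if the job uses a format that only runs on Windows


def routing_tag(job: Job) -> str:
    """Return a hypothetical job routing tag for a job."""
    # Platform requirements come first: a Windows-only format cannot run
    # on a Linux engine no matter how small the job is.
    if job.needs_windows:
        return "windows"
    if job.input_size_mb >= LARGE_JOB_MB:
        return "large"
    return "small"


jobs = [
    Job("clip_parcels.fmw", 12.0, False),
    Job("merge_lidar.fmw", 2048.0, False),
    Job("convert_legacy.fmw", 40.0, True),
]
tags = [routing_tag(job) for job in jobs]
print(tags)  # ['small', 'large', 'windows']
```

The platform check comes before the size check because running a job on an engine that cannot read its format would fail outright, whereas running a large job on a "small" engine would only be slower.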

Job routing is also used when Adding FME Engines on a Separate Machine, to route jobs to engines that are located in close physical proximity to the data they read and write.

You can configure engines to process certain jobs based on the tag specified in the transformation request. To learn more, see Sending Jobs to Specific Engines with Job Routing.

Job Priority

FME Server also lets you set job priority using the "priority" directive. Jobs sent with a priority setting can be moved higher in the job queue. To learn more, see Transformation Manager.
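The following simplified priority queue in Python shows how a priority value can move a job ahead of jobs submitted earlier. It is not FME Server's implementation. The numeric scale (a smaller number means higher priority), the priority values, and the job names are assumptions made for this sketch. See Transformation Manager for how the "priority" directive is actually interpreted.

```python
import heapq
import itertools


class JobQueue:
    """Simplified priority job queue. Assumption for this sketch: a smaller
    number means higher priority, and jobs with equal priority run in the
    order they were submitted."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker preserving submission order

    def submit(self, job, priority):
        heapq.heappush(self._heap, (priority, next(self._order), job))

    def next_job(self):
        # Pop the highest-priority (smallest number) job, or None if empty.
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = JobQueue()
queue.submit("nightly_backup", priority=100)
queue.submit("daily_report", priority=100)
queue.submit("urgent_fix", priority=1)  # submitted last, but jumps the queue
order = [queue.next_job() for _ in range(3)]
print(order)  # ['urgent_fix', 'nightly_backup', 'daily_report']
```

The submission counter ensures that jobs with the same priority keep first-in, first-out order. Without it, the heap would fall back to comparing job names.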