Accesses the Hadoop Distributed File System (HDFS) to upload, download, or delete files and folders, or to list the contents of a folder.

Typical Uses

- Manage datasets on HDFS by uploading, downloading, and deleting files and folders

- Transfer a file's contents (such as XML, point cloud, or raster) into or out of an attribute in FME

- Read downloaded HDFS data using the FeatureReader, or upload data written by the FeatureWriter to HDFS

- Retrieve file and folder names, paths, links, and other information from HDFS for use elsewhere in a workspace

How does it work?

The HDFSConnector uses your HDFS account credentials (either via a previously defined FME web connection, or by setting up a new FME web connection right from the transformer) to access the file storage service.

Depending on the selected HDFS Action, it uploads or downloads files, folders, and attributes; lists information from the service; or deletes items from the service. On uploads, path and ID attributes are added to the output features. On List actions, file and folder information is added as attributes.
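As a rough illustration only: this page does not describe how the HDFSConnector communicates with HDFS, but many clusters expose the standard WebHDFS REST API, where each HDFS Action has a counterpart operation. The sketch below assumes WebHDFS and builds the HTTP method and URL for each action. `webhdfs_request` and the host name are hypothetical; 9870 is the default NameNode HTTP port in Hadoop 3.

```python
from typing import Tuple
from urllib.parse import quote, urlencode

# Assumed correspondence between HDFSConnector actions and WebHDFS operations.
ACTION_TO_WEBHDFS = {
    "Upload": ("PUT", "CREATE"),
    "Download": ("GET", "OPEN"),
    "Delete": ("DELETE", "DELETE"),
    "List": ("GET", "LISTSTATUS"),
}


def webhdfs_request(base_url: str, action: str, hdfs_path: str, **params: str) -> Tuple[str, str]:
    """Return the HTTP method and URL for an action (hypothetical helper)."""
    if not hdfs_path.startswith("/"):
        raise ValueError("HDFS paths must be absolute, for example '/data/file.txt'")
    method, op = ACTION_TO_WEBHDFS[action]
    query = urlencode({"op": op, **params})
    # WebHDFS URLs have the form <base>/webhdfs/v1/<absolute path>?op=<OPERATION>
    return method, f"{base_url.rstrip('/')}/webhdfs/v1{quote(hdfs_path)}?{query}"


if __name__ == "__main__":
    # Placeholder NameNode address; replace with your own.
    print(webhdfs_request("http://namenode.example.com:9870", "List", "/data/orthos"))
    print(webhdfs_request("http://namenode.example.com:9870", "Delete", "/tmp/old", recursive="true"))
```

In real WebHDFS traffic, CREATE and OPEN requests are redirected by the NameNode to a DataNode, and deleting a non-empty folder requires `recursive=true`.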

Examples

In this example, the HDFSConnector is used to download an Esri Geodatabase from HDFS. A valid web connection to an HDFS account is created first (this can be done directly from the HDFS Account parameter). Browsing to the geodatabase folder then fills in the HDFS path, and a destination for the download is selected.

A FeatureReader is added to read the newly downloaded dataset. Here, the PostalAddress feature type will be further processed elsewhere in the workspace.

By executing the download here in the workspace, the geodatabase will be refreshed every time the workspace is run.

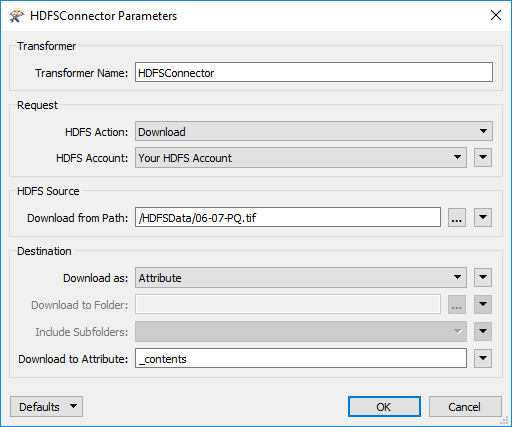

In this portion of an example workspace, the HDFSConnector is used to download a raster orthoimage from HDFS into an attribute.

The file is read from HDFS, and the contents stored as a blob attribute. Then a RasterReplacer is used to interpret the blob into a usable raster format.

The combination of these two transformers avoids having to download the image to local storage and re-read it. A similar technique can be used for point cloud files, using the PointCloudReplacer transformer.

Usage Notes

- This transformer cannot be used to directly move or copy files between different HDFS locations. However, multiple HDFSConnectors can be used to accomplish these tasks.

- The FeatureReader can access HDFS directly (without using the HDFSConnector); however, a local copy of the dataset will not be created.
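The chaining described in the first note can be modeled as follows. `InMemoryHdfs` is a hypothetical in-memory stand-in, not an FME or Hadoop API; each method call corresponds to one HDFSConnector with the matching HDFS Action. A copy is a Download followed by an Upload, and a move adds a final Delete.

```python
from typing import Dict


class InMemoryHdfs:
    """Hypothetical stand-in for an HDFS file system, keyed by absolute path."""

    def __init__(self) -> None:
        self.files: Dict[str, bytes] = {}

    def download(self, path: str) -> bytes:
        return self.files[path]

    def upload(self, path: str, data: bytes) -> None:
        self.files[path] = data

    def delete(self, path: str) -> None:
        del self.files[path]


def copy(fs: InMemoryHdfs, src: str, dst: str) -> None:
    # First HDFSConnector: Download. Second HDFSConnector: Upload to the new path.
    fs.upload(dst, fs.download(src))


def move(fs: InMemoryHdfs, src: str, dst: str) -> None:
    copy(fs, src, dst)
    # Third HDFSConnector: Delete the source, only after the upload has succeeded.
    fs.delete(src)
```

For binary files, downloading to a file rather than an attribute avoids the UTF-8 conversion described under Download to Attribute.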

Configuration

Input Ports

This transformer accepts any feature.

Output Ports

Output

The output of this transformer varies depending on the HDFS Action performed.

- After an Upload action, the HDFS path and ID of the uploaded file or folder are saved to attributes.

- A Download action can save to a file, a folder, or an attribute.

- A Delete action has no output ports.

- A List action will output a new feature for each file/folder found in the path specified. Each of these new features will have attributes listing various pieces of information about the object.

<Rejected>

Features that cause the operation to fail are output through this port. An fme_rejection_code attribute with the value ERROR_DURING_PROCESSING is added, along with an fme_rejection_message attribute containing more specific details about the reason for the failure.

Note: If a feature comes into the HDFSConnector already having a value for fme_rejection_code, this value will be removed.

Rejected Feature Handling can be set to either terminate the translation or continue running when a rejected feature is encountered. This setting is available both as a default FME option and as a workspace parameter.
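The rejection rules above can be summarized in a short sketch. Features are modeled as plain dictionaries, and `process_feature` and the returned port labels are hypothetical; this mirrors the documented behavior and is not FME's implementation.

```python
from typing import Callable, Dict, Tuple

Feature = Dict[str, object]


def process_feature(
    feature: Feature,
    operation: Callable[[Feature], Feature],
    terminate_on_reject: bool = False,
) -> Tuple[str, Feature]:
    """Route a feature to a port name, following the documented rejection rules."""
    working = dict(feature)
    # An incoming fme_rejection_code value is removed before the operation runs.
    working.pop("fme_rejection_code", None)
    try:
        return "Output", operation(working)
    except Exception as exc:
        if terminate_on_reject:
            # Rejected Feature Handling set to terminate: stop the translation.
            raise
        working["fme_rejection_code"] = "ERROR_DURING_PROCESSING"
        working["fme_rejection_message"] = str(exc)
        return "<Rejected>", working
```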

Parameters

| HDFS Action | The type of operation to perform. Choices include Delete, Download, List, and Upload. |

| HDFS Account | Performing operations against an HDFS account requires one of the three available authentication mechanisms (Simple, Token, or Kerberos), configured through a web connection. To create an HDFS connection, click the HDFS Account drop-down box and select Add Web Connection. The connection can then be managed via Tools > FME Options > Web Connections. |
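The three mechanisms match those defined by the WebHDFS REST API. Assuming the web connection ultimately authenticates through WebHDFS (this page does not say so), each mechanism appears differently in a request, as the hypothetical helper below shows: Simple passes a user name, Token passes a delegation token, and Kerberos negotiates through HTTP headers instead of query parameters.

```python
from typing import Dict, Optional


def auth_query_params(mechanism: str, user: Optional[str] = None, token: Optional[str] = None) -> Dict[str, str]:
    """Query parameters WebHDFS expects for each authentication mechanism (sketch)."""
    if mechanism == "Simple":
        if not user:
            raise ValueError("Simple authentication needs a user name")
        return {"user.name": user}
    if mechanism == "Token":
        if not token:
            raise ValueError("Token authentication needs a delegation token")
        return {"delegation": token}
    if mechanism == "Kerberos":
        # Kerberos uses SPNEGO, carried in "Authorization: Negotiate" HTTP headers,
        # so no query parameter is added.
        return {}
    raise ValueError(f"Unknown authentication mechanism: {mechanism}")
```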

The remaining parameters available depend on the value of the Request > HDFS Action parameter. Parameters for each HDFS Action are detailed below.

HDFS Action: Delete

HDFS Path

| Path to Delete | The path of a file or folder on HDFS to delete. If valid credentials have been provided as a web connection, you may browse to a location. |

HDFS Action: Download

HDFS Source

| Download from Path | The HDFS path of the file or folder to download. If valid credentials have been provided as a web connection, you may browse to a location. |

Destination

| Download as | Select whether to store the downloaded data in a File, Folder, or Attribute. |

| Download to Folder | Specify the path to the local folder that will store the downloaded file or folder. Valid for Download as File or Folder. |

| Include Subfolders | Choose whether to download subfolders of the HDFS Source or not. Valid for Download as Folder only. |

| Download to Attribute | Specify the attribute that will store the contents of the downloaded file. Valid for Download as Attribute only. Note: FME will attempt to convert the attribute's contents to a UTF-8 string. If this fails, the attribute's contents will be created as raw binary. To ensure that the original bytes are always preserved and never converted to UTF-8, use Download as File instead. |
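The attribute conversion rule can be sketched in plain Python. This mirrors the documented behavior and is not FME's code; `to_attribute_value` is a hypothetical name. Note the last example: binary content that happens to be valid UTF-8 is converted to a string too, which is one reason Download as File is the safer choice when the exact bytes matter.

```python
from typing import Union


def to_attribute_value(data: bytes) -> Union[str, bytes]:
    """Try to decode downloaded bytes as UTF-8; fall back to the raw bytes."""
    try:
        return data.decode("utf-8")
    except UnicodeDecodeError:
        # Not valid UTF-8 (for example, most raster files): keep the original bytes.
        return data


print(repr(to_attribute_value(b"<Ortho id='1'/>")))   # decoded to str
print(repr(to_attribute_value(b"\x89PNG\r\n\x1a\n")))  # stays bytes
print(repr(to_attribute_value(b"\x00\x01\x02")))       # binary, but valid UTF-8, so str
```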

HDFS Action: List

HDFS Path

| List Contents at Path | The folder path on HDFS to list the contents of. If valid credentials have been provided as a web connection, you may browse to a location. |

Output Attributes

Default attribute names are provided and can be changed.

| File or Folder Name | Specify the attribute to hold the name of an object on HDFS. |

| File or Folder ID | Specify the attribute to hold the ID of an object on HDFS. |

| File or Folder Link | Specify the attribute to hold the link to an object on HDFS. |

| File Size | Specify the attribute to hold the size of a file object on HDFS. |

| Last Modified | Specify the attribute to hold the last modified date of an object on HDFS. |

| File or Folder Flag | Specify the attribute to hold the type (file or folder) of an object on HDFS. |

| Relative Path | Specify the attribute to hold the relative path of an object on HDFS. |

| File Last Accessed | Specify the attribute to hold the last accessed date of a file object on HDFS. |

| File Block Size | Specify the attribute to hold the block size of a file object on HDFS. |

| Owner Group | Specify the attribute to hold the name of the group that owns an object on HDFS. |

| Owner User | Specify the attribute to hold the name of the user that owns an object on HDFS. |

| Octal Permission | Specify the attribute to hold the octal permission code of an object on HDFS. |

| File Replication Count | Specify the attribute to hold the replication factor (number of replicas) of a file object on HDFS. |
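As an assumption-labeled sketch: if the listing comes from a WebHDFS `LISTSTATUS` call, most of these attributes correspond directly to fields of its `FileStatus` objects. The attribute keys below are hypothetical stand-ins for whatever names you choose, and the sample entry is made up. Link and relative path are omitted because their exact derivation is not described here.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def epoch_ms_to_utc(ms: int) -> str:
    """WebHDFS reports times as milliseconds since the Unix epoch."""
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc).isoformat()


def status_to_attributes(status: Dict[str, Any]) -> Dict[str, Any]:
    """Map one WebHDFS FileStatus entry to List-style attributes (hypothetical names)."""
    is_file = status["type"] == "FILE"
    attrs: Dict[str, Any] = {
        "name": status["pathSuffix"],
        "id": status.get("fileId"),  # may be absent, depending on the server
        "type": "file" if is_file else "folder",
        "last_modified": epoch_ms_to_utc(status["modificationTime"]),
        "owner_user": status["owner"],
        "owner_group": status["group"],
        "octal_permission": status["permission"],
    }
    if is_file:
        # These attributes only apply to file objects.
        attrs.update(
            size=status["length"],
            last_accessed=epoch_ms_to_utc(status["accessTime"]),
            block_size=status["blockSize"],
            replication=status["replication"],
        )
    return attrs


# Made-up sample entry shaped like one item of a LISTSTATUS response.
sample = {
    "pathSuffix": "ortho_001.tif", "type": "FILE", "length": 24930,
    "blockSize": 134217728, "replication": 3, "owner": "gisuser",
    "group": "supergroup", "permission": "644", "fileId": 16400,
    "modificationTime": 1700000000000, "accessTime": 1700000000000,
}
print(status_to_attributes(sample))
```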

HDFS Action: Upload

Source

| Upload | The type of data to be uploaded: File, Folder, or Attribute. When working with large objects, File is a better choice than Attribute, because the data is streamed directly from disk instead of being held entirely in memory on a feature. HDFS identifies files by their full path, so a folder cannot contain two files with the same name; when an upload targets a file name that already exists, the If File Exists at Destination parameter determines what happens. To upload the contents of a folder, choose Folder rather than setting a fixed path and sending multiple features into the connector to upload each file individually. |

| File to Upload | The file to be uploaded to HDFS if Upload is set to File. |

| Folder to Upload | The folder to be uploaded to HDFS if Upload is set to Folder. |

| Include Subfolders | Choose whether to upload subfolders of the Folder to Upload or not. |

| Attribute to Upload as File | The data to be uploaded, supplied from an attribute if Upload is set to Attribute. |
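To show what a folder upload covers and what Include Subfolders changes, here is a sketch that plans the pairs of local files and HDFS destination paths. `plan_folder_upload` is hypothetical, and it assumes the folder's contents are placed directly under Upload to Path; the connector may lay out the destination differently (for example, inside a subfolder named after the source folder).

```python
import os
import posixpath
from typing import List, Tuple


def plan_folder_upload(local_folder: str, upload_to_path: str, include_subfolders: bool) -> List[Tuple[str, str]]:
    """Return (local file, HDFS destination path) pairs for a folder upload."""
    pairs: List[Tuple[str, str]] = []
    for root, dirs, files in os.walk(local_folder):
        rel_dir = os.path.relpath(root, local_folder)
        parts = [] if rel_dir == os.curdir else rel_dir.split(os.sep)
        for name in sorted(files):
            # HDFS paths always use "/", whatever the local separator is.
            pairs.append((os.path.join(root, name), posixpath.join(upload_to_path, *parts, name)))
        if include_subfolders:
            dirs.sort()  # walk subfolders in a predictable order
        else:
            dirs.clear()  # stop os.walk from descending into subfolders
    return pairs
```

One folder upload handles every file in a single operation, whereas a fixed path with one feature per file requires the connector to run once per file.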

HDFS Destination

| Upload to Path | The path on HDFS to upload the source data to. To upload to the root directory, enter “/”. |

| Upload with File Name | The name of the file created from the data supplied in Attribute to Upload as File. The name must include a filename extension (for example, .txt, .jpg, .doc). |

Upload Options

| If File Exists at Destination | Choose whether to overwrite, append, or do nothing if a file already exists at the upload destination. |

| File/Directory Permission | The octal permission (UNIX format) value to set for the uploaded object. This parameter is unavailable if Append is chosen for If File Exists at Destination; in that case, the existing file keeps its original permission setting. |
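The upload options can be sketched in WebHDFS terms, again only as an assumption about the underlying protocol: overwrite corresponds to `CREATE` with `overwrite=true`, append to `APPEND`, and do nothing to skipping the request. The option labels, `plan_upload`, and `octal_to_symbolic` are hypothetical; the second helper shows how an octal value such as 755 reads in UNIX `rwx` notation.

```python
import re
from typing import Dict, Optional

OPTIONS = ("Overwrite", "Append", "Do Nothing")
# Three-digit UNIX octal permission, optionally with a leading sticky-bit digit (000 to 1777).
_OCTAL = re.compile(r"[01]?[0-7]{3}")


def plan_upload(if_exists: str, file_exists: bool, permission: Optional[str] = None) -> Optional[Dict[str, str]]:
    """Return assumed WebHDFS query parameters for an upload, or None to skip it."""
    if if_exists not in OPTIONS:
        raise ValueError(f"Unknown option: {if_exists!r}")
    if permission is not None and not _OCTAL.fullmatch(permission):
        raise ValueError(f"Not an octal permission: {permission!r}")
    # file_exists would come from an earlier check, e.g. a WebHDFS GETFILESTATUS call.
    if file_exists and if_exists == "Do Nothing":
        return None
    if file_exists and if_exists == "Append":
        # Appending keeps the existing file's permission, so none is sent.
        return {"op": "APPEND"}
    params = {"op": "CREATE"}
    if file_exists:
        params["overwrite"] = "true"  # only reached for Overwrite
    if permission is not None:
        params["permission"] = permission
    return params


def octal_to_symbolic(permission: str) -> str:
    """Convert e.g. '755' to 'rwxr-xr-x' (the sticky bit is ignored)."""
    bits = int(permission, 8) & 0o777
    out = []
    for shift in (6, 3, 0):  # owner, group, others
        digit = (bits >> shift) & 0o7
        out.append(("r" if digit & 4 else "-") + ("w" if digit & 2 else "-") + ("x" if digit & 1 else "-"))
    return "".join(out)
```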

Output Attributes

| File or Folder ID | Specify the output attribute that will store the ID of the file or folder that was just uploaded. |

| File or Folder Path | Specify the output attribute that will store the path to the uploaded file or folder on HDFS. |

Editing Transformer Parameters

Using a set of menu options, transformer parameters can be assigned by referencing other elements in the workspace. More advanced functions, such as an advanced editor and an arithmetic editor, are also available in some transformers. To access a menu of these options, click the button beside the applicable parameter. For more information, see Transformer Parameter Menu Options.


Defining Values

There are several ways to define a value for use in a transformer. The simplest is to type in a value or string, which can include functions of various types such as attribute references, math and string functions, and workspace parameters. A number of tools and shortcuts can assist in constructing values; they are generally available from the drop-down context menu adjacent to the value field.

Using the Text Editor

The Text Editor provides a convenient way to construct text strings (including regular expressions) from various data sources, such as attributes, parameters, and constants, where the result is used directly inside a parameter.

Using the Arithmetic Editor

The Arithmetic Editor provides a convenient way to construct math expressions from various data sources, such as attributes, parameters, and feature functions, where the result is used directly inside a parameter.

Conditional Values

Set values depending on one or more test conditions that either pass or fail.

Parameter Condition Definition Dialog

Content

Expressions and strings can include a number of functions, characters, parameters, and more.

When setting values - whether entered directly in a parameter or constructed using one of the editors - strings and expressions containing String, Math, Date/Time or FME Feature Functions will have those functions evaluated. Therefore, the names of these functions (in the form @<function_name>) should not be used as literal string values.

| String Functions | These functions manipulate and format strings. |

| Special Characters | A set of control characters is available in the Text Editor. |

| Math Functions | Math functions are available in both editors. |

| Date/Time Functions | Date and time functions are available in the Text Editor. |

| Operators | These operators are available in the Arithmetic Editor. |

| FME Feature Functions | These return primarily feature-specific values. |

| FME and Workspace Parameters | FME and workspace-specific parameters may be used. |

| Creating and Modifying User Parameters | Create your own editable parameters. |

Dialog Options - Tables

Transformers with table-style parameters have additional tools for populating and manipulating values.

| Row Reordering | Enabled once you have clicked on a row item. |

| Cut, Copy, and Paste | Enabled once you have clicked on a row item. Cut, copy, and paste may be used within a transformer, or between transformers. |

| Filter | Start typing a string, and the table will only display rows matching those characters. Searches all columns. This only affects the display of attributes within the transformer; it does not alter which attributes are output. |

| Import | Import populates the table with a set of new attributes read from a dataset. Specific application varies between transformers. |

| Reset/Refresh | Generally resets the table to its initial state, and may provide additional options to remove invalid entries. Behavior varies between transformers. |

Note: Not all tools are available in all transformers.

Reference

| Processing Behavior | |

| Feature Holding | No |

| Dependencies | HDFS account |

| FME Licensing Level | FME Base Edition and above |

| Aliases | |

| History | Released FME 2018.0 |

FME Community

The FME Community is the place for demos, how-tos, articles, FAQs, and more. Get answers to your questions, learn from other users, and suggest, vote, and comment on new features.

Search for all results about the HDFSConnector on the FME Community.