To fix some common problems that can occur when reading or writing a Microsoft Excel file, you can first try the suggestions below:

- Ensure the Microsoft Excel file is not open in Excel or another instance of FME.

- Ensure the Microsoft Excel file is not password protected. This module does not currently support password-protected files.

- If the file is too large, it may fail to load into memory. You can try several possible workarounds:

- Divide the file into separate files (if the spreadsheet contains many worksheets that do not reference each other).

- Export the file into CSV and then read it using the CSV reader.

-

Reading .xlsb files is possible on Windows, but requires that Excel is installed locally. FME Flow does not support reading .xlsb files – this is a limitation of Excel itself.

-

Writing Excel files takes a long time to complete the translation. This may have several causes:

-

When using a template file, ensure the file is as small as possible. Large workbooks with many formats or sheets with extraneous data will still be loaded into memory which may slow down the translation.

-

When running on Windows with Excel installed, FME will ask Excel to recalculate all formula results on write when using a template file. The larger the workbook, or if many formulae are present, this will cause the translation to run longer.

-

More Information

- Reading Sheets With Different Start Rows in Dynamic Workflows

- How FME Determines Schema in Excel

- Max Features to Read

- Increasing memory use in writer

- Permissions do not work as expected

- Unique Column Names Have Numbers Appended

- Selecting Update Feature Types doesn’t refresh schema

- Schema is not Updating After Changing Source Files

- Schema does not Reflect Additional Sheets When Reading Multiple Source Files

Reading sheets with different start rows in dynamic workflows

In Excel, the header names row and data start row are considered part of the schema definition as different values for these can result in different attribute names and types being detected - this is very different from most other formats that have a clearly defined schema. In a static workflow, these start parameters are saved individually per feature type which allows FME to reliably read each unique sheet or named range. In a dynamic workflow (merged feature type), only one set of parameters is saved and are applied to every sheet and named range found in the file. This will cause issues for workbooks where the sheets do not all start on the same row, such as starting on incorrect rows or incorrectly detecting the column/attribute names in a sheet. This is a limitation of how the Excel Reader is called by the core of FME and applies to both sheets and named ranges. Part of our workarounds in the past was to attempt to match the “Configuration Dataset” path name - the file that was used when the Reader Parameters window was opened during the initial reader creation or reader update. This method is fragile, as publishing workspaces to FME Flow would very likely change the input file path and the saved path would no longer match. Options existed to try and detect header and start rows in these cases, effectively asking the reader to “guess”, and for as many times as the read would “just work” there were as many cases where it did not work correctly.

Where possible, we recommend using static workflows, especially for workbooks that have more than one set of header and start rows. If the sheets of interest will always have the same start rows and sheet names, the actual file being read can be changed by connecting the reader dataset to a user parameter if needed.

For best results in dynamic workflows, use one reader per set of sheets that share header and data start rows and use a feature type filter on the merged feature type to remove sheets that do not match. This method may result in additional readers being required to read all the sheets of interest, but it will produce more consistent results.

How FME determines schema in Excel

In most other readers, attribute names and types are fairly straightforward to obtain. Relational databases and Esri Shape files, for example, contain metadata that definitively determines what the attributes are called and their types. Excel does not contain such information and the reader is left to attempt to determine that information on its own which is why the Reader Parameters window is more complex than most other formats. The Excel Reader relies on the user to provide some context, specifically where to look for header rows and subsequently the data start or range.

Lacking that information, the reader is forced to make some assumptions about the layout of excel data: the first row with any cell values is the Field Names row that defines the attribute names and following rows contain data that will be read as features. Blank values in the Field Names row that later contain values take the column name as the attribute name. The reader will continue to read features until the last row of the range whose cells are not empty has been read. By default, up to 1000 rows will be read to determine the type of the column - if all have either empty or numeric values that are whole numbers, an integer type is assumed. If one value in such a column has a decimal portion, a floating point type will be taken instead. If a column contains a non-numeric value at any point, the column is treated as a string type instead.

If an Excel table has multiple header rows, select the one with the most specific names as the Field Names row.

Max Features to Read

The schema is determined either through Feature Type parameters or by scanning x features of the feature type, where x is defined by the Max Features to Read parameter in the FME Workbench Navigator. The schema is useful for defining the schema which may be used by writers when generating a workspace, but it is ignored on read.

Therefore, features beyond the Max Features to Read might return values that do NOT comply with the schema. (If this is an issue, you can re-add a reader with a larger value for Max Features to Read or manually edit the attribute types in the Attributes tab of the parameters dialog).

Permissions do not work as expected

If permissions do not seem to be working correctly – for example, you cannot delete columns in generated Excel worksheets despite setting the Delete Unlocked Columns permission – your cells may still be locked (since this is the default for all cells in new Excel worksheets).

For more information, please refer to Locked Versus Unlocked.

Unique Column Names Have Numbers Appended

The Excel format uses special “meta attributes” to represent metadata associated with a particular value – the ones used in reading the cell formatting (<attrName>.format), comments (<attrName>.comment), hyperlinks (<attrName>.hyperlink), and formulas (<attrName>.formula).

These are special reserved names (similar to format attributes), but they can exist on a per-attribute basis and they have special meaning when processing, even when not enabled in the reader options. The Excel Reader and Writer interpret these attribute names in specific ways, and so in the rare case one of these is the name of a column in user data, that column name is renamed to prevent mishandling as though another attribute with the same name already exists.

For example, a workbook containing the columns User, Date, and User.comment will produce the attributes User, Date, and User.comment00. This is to prevent the reader from interpreting the User.comment column as one of the special meta attributes and checking the User column cells for comment values instead. Likewise on the writing side, a User.comment would cause the writer to attempt to write the column’s values as comments on the User cells instead of recording them as a separate column. This is why you should avoid removing the numeric suffix in a workspace before writing. The same would be true for a Date.format column in the worksheet, except its values would cause the writer to attempt to use the value to create a cell style instead.

Currently there are four suffixes that cannot be used at the end of user attributes in Excel (corresponding to the four meta attribute types available):

-

.comment

-

.format

-

.formula

-

.hyperlink

The reader will attempt to correct for this by appending a numeric value to the column name to ensure uniqueness and correct behavior. The writer is unable to distinguish between meta attributes from their intended source and user attributes with these suffixes that should be written as a regular column value; thus, all are treated as the meta attribute with their particular functionality.

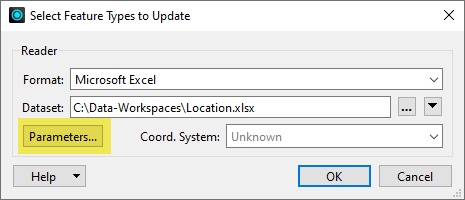

Selecting Update Feature Types doesn’t refresh schema

If Update Feature Types does not refresh the schema, open the Parameters dialog while in the Update dialog. This will trigger a new read of schema.

Schema is not updating after changing source files

Excel is an “Unstructured data format”, meaning that there is no definitive way to determine schema. Another example of this type of format is CSV, which usually starts with a header row but not always and there is no established way to indicate that it either does or does not.

For Excel, we rely on the user to provide some context so we can read the data correctly. Historically, scanning for Excel schema was very slow, so we cached the schema along with the path of the file that the schema was generated from so that on later reader edits they would not have to wait for the reader to fully rescan the file. This allows the read operation to proceed without scanning the file and complete more quickly. This has the side-effect of “locking in” schema unless the reader parameters window is reopened, forcing a re-scan of the file.

If your source workbook has had edits made to the field names row, these changes will not be updated until you open the Reader Parameters dialog. In some situations, the schema will be scanned – by the Schema Reader or when using a Merged Feature type. The merged feature type still saves the original schema, but allows new column names to be added at runtime.

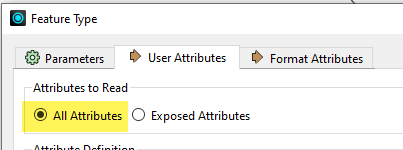

If a column has been renamed in such a way, the reader will still produce the old attribute name with null or missing data, and produce the new attribute name with the data. With individual feature types, it is possible to have the reader detect new attribute names by setting the Attributes to Read option in the User Attributes feature type dialog to All Attributes:

Note that the Feature Reader is not a merged feature type and will function identically to a reader using individual feature types in this regard.

Schema does not reflect additional sheets when reading multiple source files

When setting up the feature types and attribute schema in the reader parameters window, only the first file is used for feature types and previews to minimize complexity for users. Since Excel may require explicitly specifying arguments that determine how FME interprets the file to create schema, specifically the header row and read ranges, it assumes that all following files will have a similar structure. Since it is possible to deselect sheets and named ranges to read, it is assumed that only the schemas that have been explicitly specified by the user are “of interest” and should be read. When the Reader Parameters window is closed, the configuration is saved as the definitive schema for all files. Any feature types not in this schema are ignored, including sheet names that may exist in additional files that were not present in the first file.

If the Reader Parameters window is not opened when creating a new reader, there is no definitive schema saved and FME will do its best to determine schema. This process is described in the section “How FME Determines Schema in Excel”. This allows all files selected to be read to contribute to schema during the addition of the reader to the workspace, but will not allow you to specify header rows or cell ranges which may not work well with all Excel files.

It is important to note that one reader will treat all its dataset files the same – whether that is with a definitive schema set, or whether it must attempt to create a schema automatically. This includes the header row and cell range settings per feature type. If different header row or cell ranges are required per file, separate reader instances are required to correctly handle them. If the header rows per feature type are identical across all files, it is possible to read all sheet names in all files if the first file also has all the sheet names of interest. For example, if you have Book1 with sheets A and B, Book2 with sheets A and C, and want to read sheets A, B, and C with a single reader, you can set up the reader with Book3 as the first file, and add empty sheets named A, B, and C to it, taking care to specify the correct header row and cell range that will be applied to both Book1 and Book2 to read features. Alternatively, you can use Book1 to specify the header row and cell range and instead select ‘Single Merged Feature Type’ and have sheets A, B, and C read at the cost of having all columns read as attributes.

Increasing memory use in writer

When repeatedly writing to the same output file(s) with either of the Drop or Truncate Existing Sheets/Named Ranges options, process memory usage may steadily increase over time until the point where the FME translation crashes. The crash is accompanied by a log message similar to:

|

Failed to free sufficient memory to reach the process usage limit. To improve stability and performance please increase the memory available to FME. Available memory can be increased by adding physical RAM, increasing swap space, or closing other applications. Excel Writer: Failed to open the dataset 'myfile.xlsx'. Error message was 'ok' XLSXW writer: A fatal error has occurred. Check the logfile above for details |

This is a symptom of the Excel shared string table growing larger to the point where it no longer fits into the memory available to FME. This is a known issue in the library we use to read Excel files, and we are attempting to find a solution that does not impact writer performance. In the meantime, there are two potential solutions to the issue:

- Recommended Solution – The recommended solution is to use the Template File writer parameter, which will make a copy of the template file to write data to each time the workspace is run. (Using the Template File parameter requires setting the Overwrite Existing File parameter to Yes.) Each new set of values will not be orphaned in the string table and the process memory usage will be minimized; output files will not grow indefinitely after repeated translations.

- Alternate Solution – Alternatively, opening the file with Excel and then resaving the file will remove unused table entries and return it to a minimum size. However, this operation may not be automatable and, depending on the size of the file, may take considerable time.