To define a new connection from the Connection parameter in a Databricks format: Select Add Database Connection, and scroll to Databricks.

See database-specific parameters below, as well as the section Adding a Database Connection in a workspace in

About Database Connections |

|---|

Database formats include a Database Connection parameter that defines and stores authentication information. For general information about sharing database connections, please see Note that Database Connection parameters differ slightly, depending on context and/or database format. |

Connection From the Connection parameter in a database format, you can do one of the following: Select an existing, previously defined connection. See the section Reusing a Database Connection in Using Database Connections Select Add Database Connection to define a new connection. See database-specific parameters below, as well as the section Adding a Database Connection in a Workspace in Using Database Connections The new connection can be made visible only to the current user, or can be shared among multiple users. |

Databricks Credentials

Server Hostname: The URL of the Databricks workspace. This will take the format https://<workspace_id>.cloud.databricks.com/ or https://adb-<id>.azuredatabricks.net/.

Cluster ID: The ID of the cluster to run Databricks commands with. This cluster should be configured with access to the cloud storage location specified in the writer parameters.

Authentication Method: Personal Access Token (default) or Databricks Sign In. Select whether to authenticate Databricks access with either a Personal Access Token or Username and Password.

Username: Used only when the Authentication Method parameter is set to Databricks Sign In. The Databricks username to use.

Password: Used only when the Authentication Method parameter is set to Databricks Sign In. The password of the Databricks account.

Generated Token Lifetime (Days): Used only when the Authentication Method parameter is set to Databricks Sign In. How long the generated token will be valid for. If this parameter is left blank, the generated Personal Access Token will not have an expiry date.

Personal Access Token: Used only when the Authentication Method parameter is set to Personal Access Token. A valid Personal Access Token which can be used to authenticate access to the Databricks workspace.

Catalog: The catalog to write to. Each writer can only write to a single catalog. Click the [...] button to see a list of accessible catalogs in the workspace.

Cloud Upload Credentials

Cloud Storage Type : Amazon S3 | Azure Data Lake Gen 2 (no default value)

Select the form of cloud storage to use as a staging area. The Databricks cluster selected should be configured with its own access to this location. The cloud storage credentials provided here will strictly be used by FME to upload data to the cloud storage location and will not be passed through in any Databricks commands.

S3 Connection: An AWS web connection containing access key credentials for an AWS bucket.

S3 Bucket: The name of an S3 bucket to upload staging files to. If the Amazon S3 FME Package is installed in Workbench, clicking the browse [...] button will display a list of buckets. Select only the buckets that are accessible by the Databricks workspace.

Cloud Authentication Method: Web Connection (default) or Microsoft SAS

Whether to provide Azure credentials through a Web Connection containing a shared access key or a Microsoft SAS token.

Azure Storage Connection: An Azure Storage Web connection containing an Azure storage account name and account shared key.

Azure Storage Account: The name of the Azure storage account to use.

Shared Access Signature (SAS): A valid Azure SAS token.

Azure Data Lake Container: The name of the Azure Data Lake container. If the Azure Storage Connector FME Package is installed in Workbench, clicking the browse [...] button will display a list of containers. Select only the containers that are accessible by the Databricks workspace.

Advanced

How many features to include in each write request. If this value is not specified, the default is 1000.

This parameter allows for the execution of SQL statements before writing to a table. For example, it may be necessary to clean up a table before attempting to write to it. The statements will be executed only when the first feature arrives at the writer.

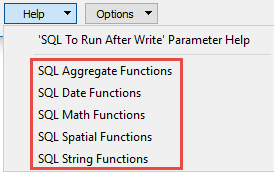

For detailed information about SQL functions, click the corresponding menu item in the .

Available menu options depend on the format.

Multiple SQL commands can be delimited by a character specified using the FME_SQL_DELIMITER directive, embedded at the beginning of the SQL block. The single character following this directive will be used to split the SQL block into SQL statements, which will then be sent to the database for execution. Note: Include a space before the character.

For example:

FME_SQL_DELIMITER ; DELETE FROM instructors ; DELETE FROM people WHERE LastName='Doe' AND FirstName='John'

Multiple delimiters are not allowed and the delimiter character will be stripped before being sent to the database.

Any errors occurring during the execution of these SQL statements will normally terminate the reader or writer (depending on where the SQL statement is executed) with an error. If the specified statement is preceded by a hyphen (“-”), such errors are ignored.

This parameter allows for the execution of SQL statements after a set of tables has been written. For example, it may be necessary to clean up a temporary view after creating it.

For detailed information about SQL functions, click the corresponding menu item in the .

Available menu options depend on the format.

Multiple SQL commands can be delimited by a character specified using the FME_SQL_DELIMITER directive, embedded at the beginning of the SQL block. The single character following this directive will be used to split the SQL block into SQL statements, which will then be sent to the database for execution. Note: Include a space before the character.

For example:

FME_SQL_DELIMITER ; DELETE FROM instructors ; DELETE FROM people WHERE LastName='Doe' AND FirstName='John'

Multiple delimiters are not allowed and the delimiter character will be stripped before being sent to the database.

Any errors occurring during the execution of these SQL statements will normally terminate the reader or writer (depending on where the SQL statement is executed) with an error. If the specified statement is preceded by a hyphen (“-”), such errors are ignored.